ParseHub allows you to scrape a list of URLs. For each run, the list of URLs (starting values) can be updated either in the ParseHub application or through our API.

In this article we will show you an alternative way to update the list of URLs that you are scraping without interacting with the application or the API. This alternative uses Google Sheets to provide the list of URLs to scrape. This way, you can update the URLs easily, and ParseHub will use the most up-to-date list each time it runs the project.

Please note that ParseHub will not start two runs with the same starting site/starting value at the same time. If you wish to start two runs for different sets of URLs simultaneously, we recommend creating two different Google sheets and starting the project once for each sheet by changing the starting site in the run's or project's settings.

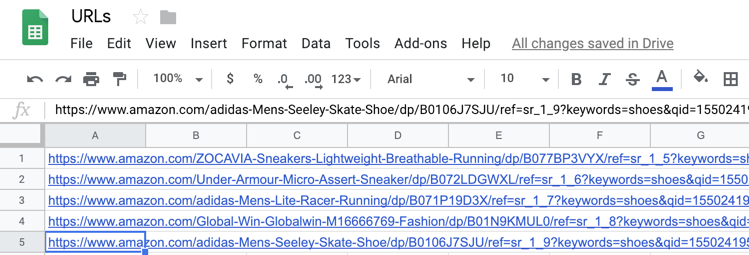

Creating a Google Sheet:

1. Log in to your Google account and choose Drive from the menu in the top-right corner.

2. Click on NEW and choose "Google Sheets".

3. Rename the Google sheet to whatever you like, and enter your list of URLs on this sheet.

4. To make this Google sheet accessible to the public, and therefore to ParseHub, you will need to publish it. Click "File" in the menu and choose "Publish to the Web".

5. A pop-up will appear, which allows you to choose the publish options and publish the sheet.

6. Once you confirm that you want to publish the sheet, Google generates a URL for your sheet which you can use in the ParseHub project. Please copy this link and save it for the next section.

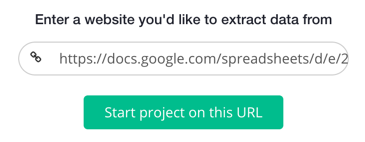
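If you maintain a long list, it can help to tidy it before pasting it into the sheet in step 3. The following is a minimal sketch, not part of ParseHub or Google Sheets: the function name `clean_url_list` and the sample URLs are hypothetical, and the rules it applies (keep only absolute `http`/`https` URLs, drop blank rows and exact duplicates) are assumptions about what a clean list looks like.

```python
from urllib.parse import urlsplit


def clean_url_list(raw_lines):
    """Return valid, de-duplicated http(s) URLs in their original order.

    Hypothetical helper for preparing the list before it goes into the sheet.
    """
    seen = set()
    cleaned = []
    for line in raw_lines:
        url = line.strip()
        if not url:
            continue  # skip blank rows
        parts = urlsplit(url)
        # Keep only absolute http(s) URLs that include a host name.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            continue
        if url in seen:
            continue  # drop exact duplicates
        seen.add(url)
        cleaned.append(url)
    return cleaned


if __name__ == "__main__":
    sample = [
        "https://example.com/product/1",
        "  https://example.com/product/2  ",
        "",
        "example.com/product/3",          # missing scheme, rejected
        "https://example.com/product/1",  # duplicate, dropped
    ]
    for url in clean_url_list(sample):
        print(url)
```

Each printed line can then be pasted into its own row of the sheet, one URL per row.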

Building the project on ParseHub:

1. Choose "Create a new project" from the toolbar and enter the Google sheet URL that you copied from the previous section.

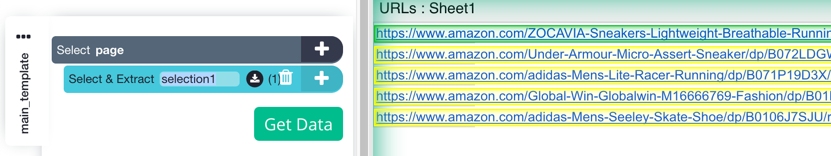

2. If you are not already in select mode, click the + button to the right of the "Select page" command and choose the "Select" tool from the tool menu. Select the first URL on the page.

3. "Select" the second URL from the list (highlighted in yellow) to select all the URLs. If this does not select all the URLs, you can click on more yellow-highlighted URLs to train ParseHub on more selections.

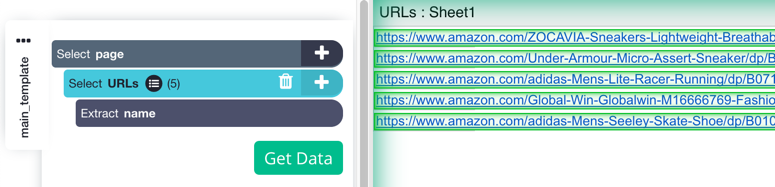

Once you have selected all the URLs, ParseHub will automatically create the Begin New Entry (hidden under the list icon), Extract name and Extract url commands. You can keep the Extract name command in order to additionally extract the text of each URL alongside the data in the final results.

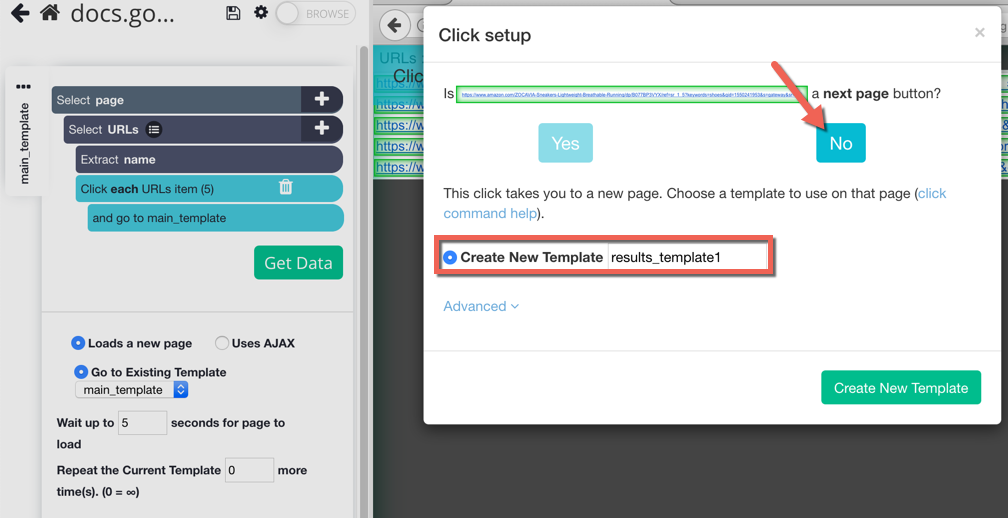

4. Click on the + button next to the Select URLs command and choose the "Click" command. The Click command's configuration pop-up will appear. Choose to create a new template and enter a name for the template.

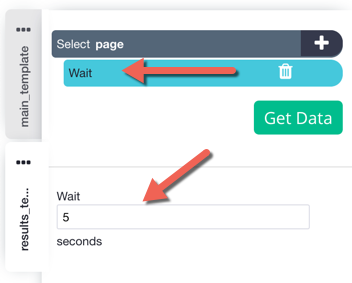

5. ParseHub will take you to the first URL and load the page. Because Google redirects you to the URL, the web page normally takes a bit longer than usual to load, so make sure to add a "Wait" command to this new template and set it to 5 seconds:

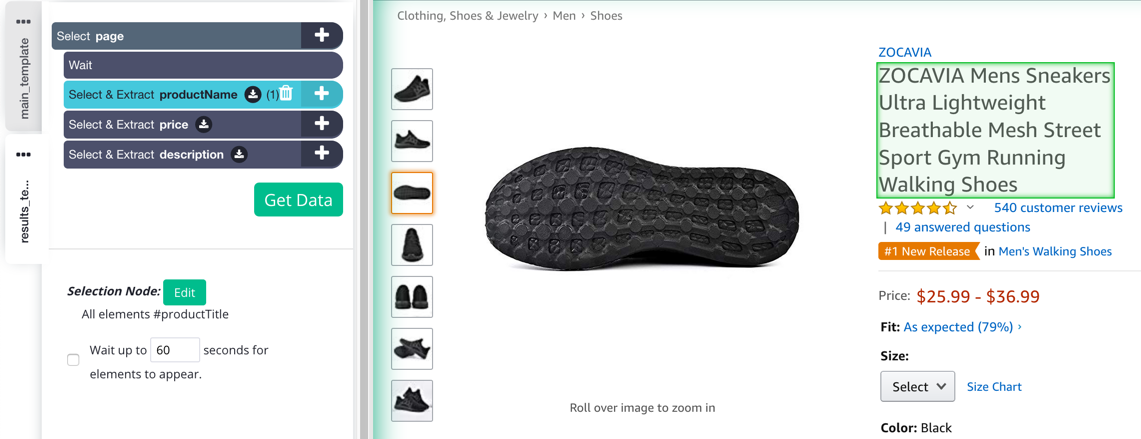

6. On this new template, you can select the product details or any elements that you want to scrape. This template will be repeated for all the URLs on the Google sheet. Note that since we selected all the URLs in the previous template, ParseHub will scrape all the URLs from the Google sheet.

Whenever you update the Google sheet, the changes will be immediately reflected in the public URL associated with the sheet. This means that whenever you re-run the project, you will be scraping the results for the most up-to-date URLs.
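If you would like to confirm which URLs the published sheet currently lists before starting a run, you can read it with a short script. This is only a sketch under stated assumptions: it assumes you have also published the same sheet in the "Comma-separated values (.csv)" format from the publish pop-up, that the URLs are in the first column, and that `csv_link` is the link Google generated for that CSV version. The function names `urls_from_csv` and `fetch_published_urls` are hypothetical and are not part of ParseHub.

```python
import csv
import io
from urllib.request import urlopen


def urls_from_csv(csv_text, column=0):
    """Extract http(s) values from one column of CSV text."""
    urls = []
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) <= column:
            continue  # blank or short row
        value = row[column].strip()
        # Header cells and notes are skipped because they are not URLs.
        if value.startswith(("http://", "https://")):
            urls.append(value)
    return urls


def fetch_published_urls(csv_link, column=0):
    """Download a sheet published as CSV and return the URLs it lists.

    csv_link is assumed to be the CSV publish link for your own sheet.
    """
    with urlopen(csv_link, timeout=30) as response:
        text = response.read().decode("utf-8")
    return urls_from_csv(text, column)
```

Calling `fetch_published_urls` with your own CSV publish link returns the list as Google currently serves it, which you can compare against the sheet before re-running the project.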

If you need more help with your project, please email us at hello@parsehub.com. We would be happy to help you.