When a Click command clicks on multiple links (e.g. scraping details from several products, or scraping products from several categories), the links usually have their own URLs. This makes it easy for ParseHub to open these pages in new "tabs" and continue clicking on links from the initial webpage. Sometimes, however, a link loads information into the current page using an AJAX call (i.e. the URL doesn't change). ParseHub's regular methods run into trouble on websites like this: clicking on the link does not change the URL, but the HTML structure of the webpage looks completely different afterwards. Once the click has happened, ParseHub can no longer refer back to the initial page it clicked on, because there is no separate URL attached to it. As a result, ParseHub cannot find the next element or link to click on and cannot continue the scrape.

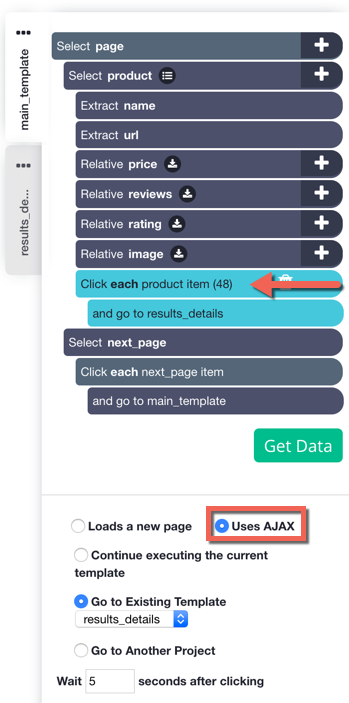

This problem is most evident when you actually run your project: ParseHub will scrape the first details page, but no pages after that. You'll also see that the setting under your Click command is "Uses AJAX" instead of "Loads a new page".

However, you can work around this and get ParseHub looping through pages, even if the website is loading information dynamically using AJAX.

To summarize, in this tutorial we will create a loop that reloads the main page (using a Go to template command) once for each item, and then clicks on that item on the freshly loaded page, until every item on the main page has been clicked.
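The idea behind the loop can be sketched in a few lines of Python. This is only a toy model and not how ParseHub is implemented: `FakePage`, `scrape_naive` and `scrape_with_reload` are hypothetical names, and the page is assumed to drop its filter links as soon as one of them is clicked, which mimics the AJAX behaviour described above.

```python
class FakePage:
    """Hypothetical stand-in for a page whose links swap the content in place (AJAX)."""

    def __init__(self, makes):
        self.links = list(makes)   # filter links visible on a freshly loaded page
        self.listings = []         # results shown after a click

    def click(self, make):
        if make not in self.links:
            raise LookupError(f"link {make!r} is no longer on the page")
        # The URL stays the same, but the page content is replaced.
        self.listings = [f"{make} listing {i}" for i in (1, 2)]
        self.links = []


def scrape_naive(makes):
    """Click every link on a single page load; breaks down after the first click."""
    page = FakePage(makes)
    results = {}
    for make in list(page.links):
        try:
            page.click(make)
        except LookupError:
            break                  # the remaining links can no longer be found
        results[make] = page.listings
    return results


def scrape_with_reload(makes):
    """Reload the main page for each item, then click only that item."""
    names = list(FakePage(makes).links)   # first pass: extract the names
    results = {}
    for name in names:
        page = FakePage(makes)            # fresh load, like a Go to template command
        page.click(name)
        results[name] = page.listings
    return results


MAKES = ["Audi", "BMW", "Ford"]            # example values, not taken from the website
naive = scrape_naive(MAKES)
reloaded = scrape_with_reload(MAKES)
print(len(naive), len(reloaded))           # prints "1 3"
```

In this model the naive loop only collects results for the first make, while the reload loop collects results for all three. The steps in the rest of the tutorial build the second pattern out of ParseHub commands.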

We will be using the leasing page on the Evans Halshaw website, a car buying/leasing website in the UK. We will be sorting the listings by car make one-by-one, and then scraping the car listings that appear on the page.

Looping through AJAX clicks

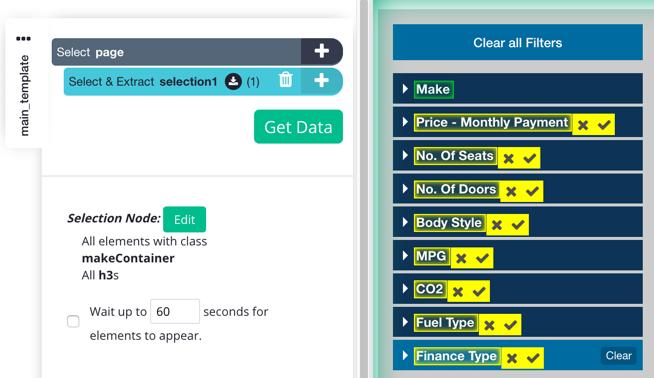

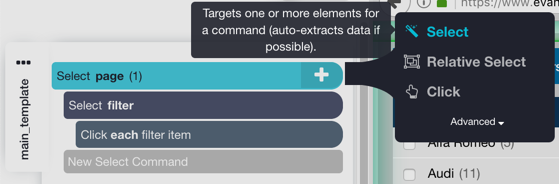

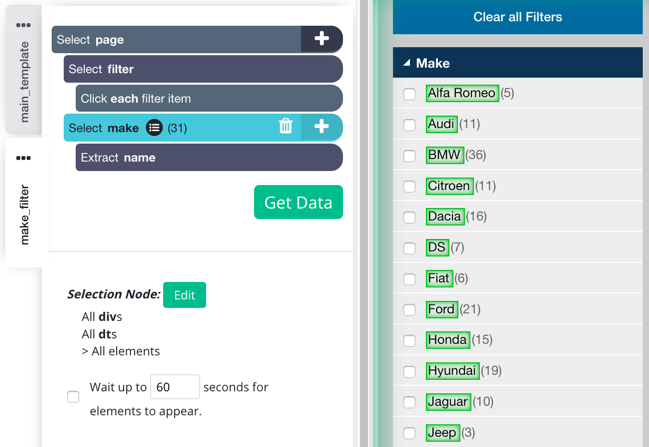

1. First, we want to extract the text of the categories (makes) that we will be scraping, since sorting by car makes uses AJAX clicks. Using the Select command that is automatically generated for you, click on the "Make" filter on the left-hand side of the website to select it.

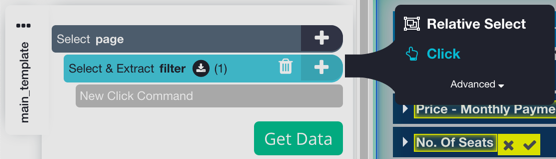

2. Rename your Select command to "filter" by clicking on it, and then click on the plus button next to "Select & Extract filter". Choose a Click command from the toolbox.

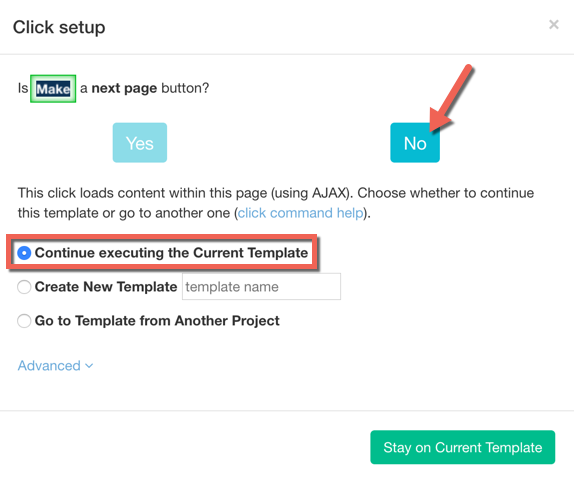

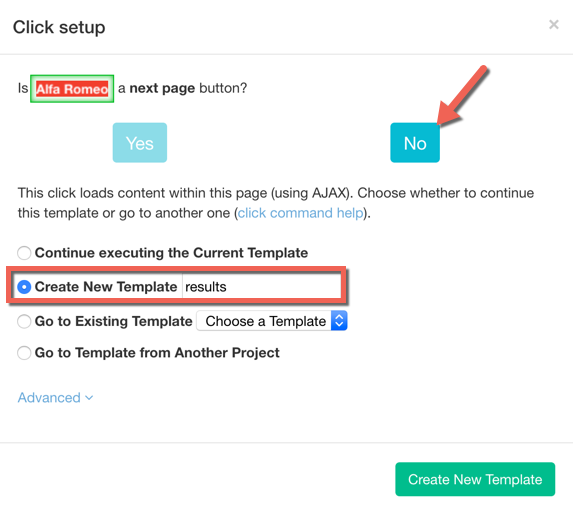

3. When the Click command's configuration window pops up, choose "No" when asked if this is a next page button, and then continue executing the current template.

4. Click on the plus button next to "Select page", and choose a Select command.

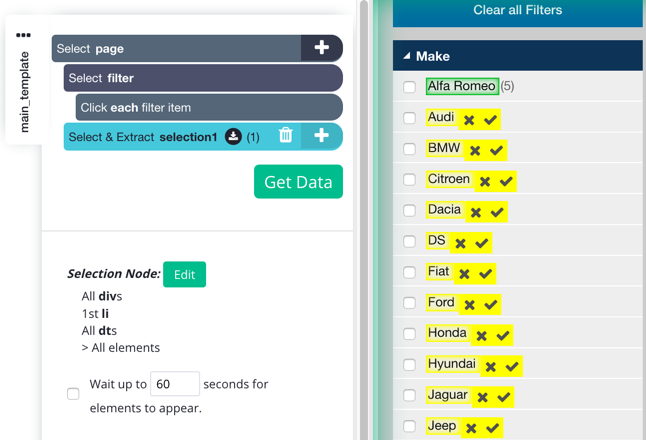

5. Using the Select command made in step 4, click on the first car make to select it. It will get highlighted in green while similar elements will be highlighted in yellow.

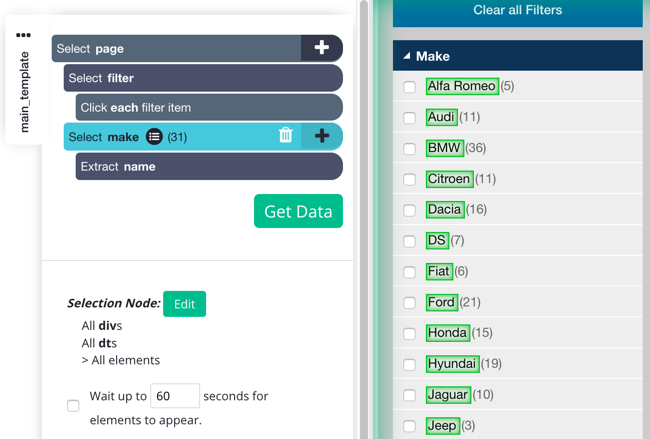

6. Now, click on a second car make. All of the makes will now be highlighted in green. Rename your Select command to "make".

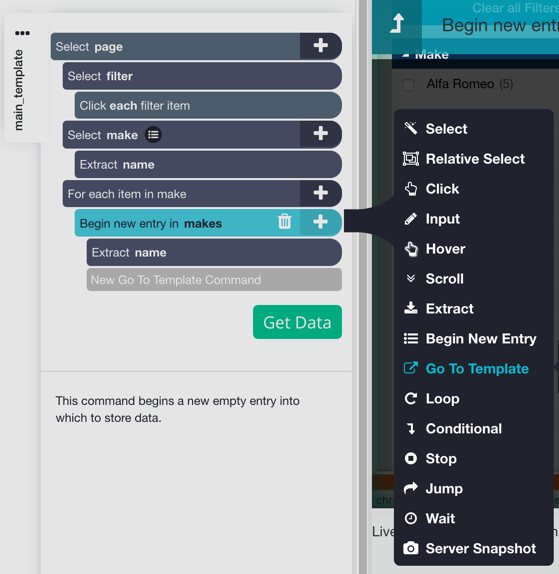

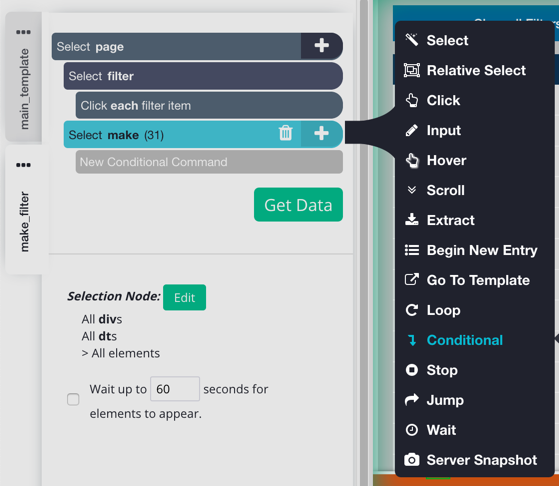

7. Click on the plus button next to "Select page", click on advanced, and then choose a Loop command from the toolbox.

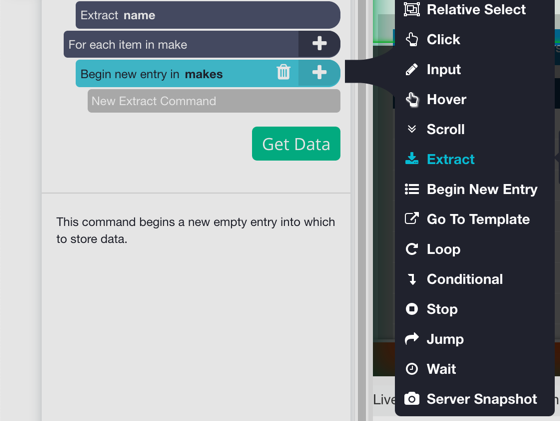

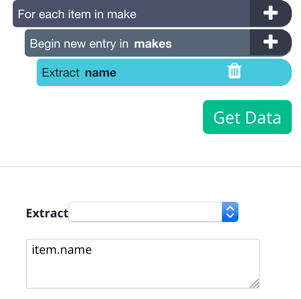

8. In the "List" box, type in "make". Now, we want to start a new entry for every category that we are going to scrape in our loop. To do this, click on the plus button next to your Loop command, and choose a Begin new entry command. Name this command "makes".

9. To actually scrape the category names, we will need to add an Extract command to our project. Do this by clicking on the plus button next to your Begin new entry command. You can also rename this command, and for this example, we've named it "name".

In the Extract command's settings, type "item.name" into the extraction text box. This refers to each car make scraped by the "Select make" command.

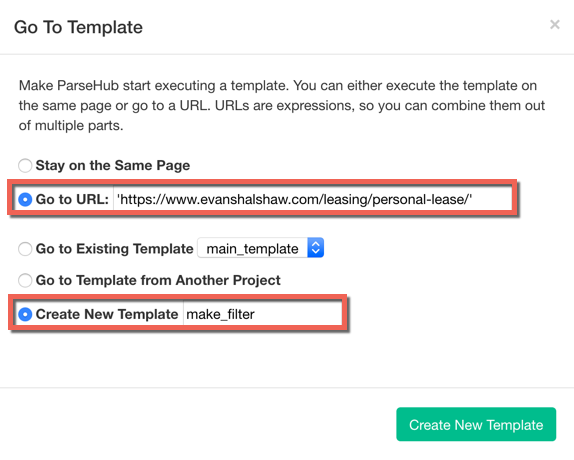

10. Now that we have created a new line for each brand we are scraping, we want to open up a new page on the website for every category. Click on the plus button next to your Begin new entry command and choose a Go to template command.

11. In the Go to template command's configuration window, type in the URL of the page that we want to loop on in quotation marks (the URL that we started the project on, in this case) and create a new template. We've named the template "make_filter" in this example.

This Loop will open a brand new page on the Evans Halshaw website for every make that was extracted from the filter.

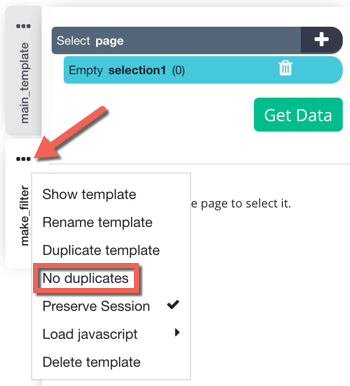

12. Before we add any commands to our make_filter template, we are going to change one of its settings. Click on the three dots above the make_filter template and disable "No duplicates". When this is enabled, ParseHub avoids scraping pages that it has already visited. Since we've already visited the leasing page in our main_template, we need to disable this setting on the make_filter template in order for its commands to be executed.

13. On your make_filter template, follow the same steps that you did previously to expand the make filter and select all of the makes. We renamed our second selection to "makes" instead of "make" on this template to differentiate it from the Select command on the main_template.

14. Click on the list icon next to "Select make" and delete the Begin new entry command that appears. Now, click on the plus button next to "Select make", click on advanced, and choose a Conditional command.

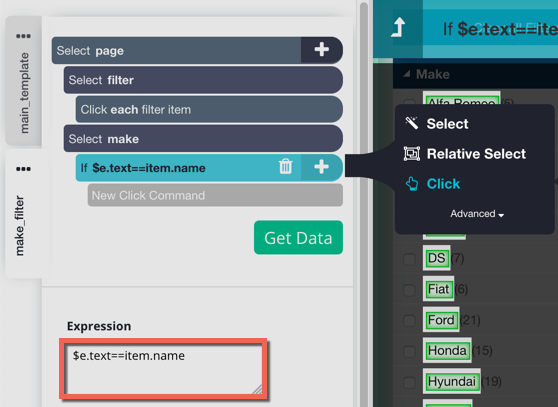

15. In your conditional command, type in '$e.text==item.name'. Now click on the plus button next to your Conditional command, and choose a Click command.

16. In the Click command's configuration window, choose "No" when asked if this is a next page button, and create a new template. We have named this new template "results".

This setup checks each make that we are selecting against the current item in the list of makes that we extracted earlier. If the text strings match each other, then the element will be clicked on for that particular item in the list of makes.
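The matching done by the Conditional command can be pictured with a short Python sketch. It is an illustration only: `Element`, `elements_to_click` and the sample makes are hypothetical, and the comparison is assumed to be a plain string equality, mirroring the expression `$e.text==item.name`.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """Hypothetical stand-in for a selected element; only its text matters here."""
    text: str


def elements_to_click(elements, item_name):
    """Keep only the elements whose text equals the current loop item's name."""
    return [e for e in elements if e.text == item_name]


# Names extracted on the main template (example values, not real data).
extracted = [{"name": "Audi"}, {"name": "BMW"}, {"name": "Ford"}]

# Elements selected again on the reloaded page.
page_elements = [Element("Audi"), Element("BMW"), Element("Ford")]

clicks = {
    item["name"]: [e.text for e in elements_to_click(page_elements, item["name"])]
    for item in extracted
}
print(clicks)
```

Each pass through the loop selects every make again, but the condition lets only the one matching the current item through to the Click command.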

The "results" template will scrape information for the actual car listings. We can now follow the instructions in this tutorial on how to scrape product details.